Client-Side Bot Detection vs. Server-Side Bot Detection

Alex McConnell, Cybersecurity Content Specialist

If you’re shopping around for a way to stop bots damaging your brand – be that through enabling automated fraud, hoarding your stock, scraping your content or prices, or just eating up server resources – you’ve probably seen vendors advocating the use of “client-side detection”.

They’re probably telling you it’s necessary for accurate bot detection. They might say that their code is obfuscated, so attackers can’t possibly detect their defensive strategies, and that even if attackers could, the code can’t be deciphered to bypass their tools. They’ll even try to tell you it’s quick and easy to install and maintain across your apps and websites.

What they’re not telling you is that using any amount of client-side detection leaves your defenses exposed to adversaries and saps your teams’ time with ongoing maintenance. There is a better, more secure, and more accurate way to detect bots: exclusively using server-side detection.

Client-Side Bot Detection vs. Server-Side Bot Detection

While client-side bot detection involves installing code on your pages or apps, server-side bot detection is able to detect bot activity without any additional code or systems on the client. This allows for more efficient monitoring of visitor requests, which not only reduces the number of bots accessing your site but also makes for a more positive user experience for genuine visitors. Implementing server-side bot detection with Netacea allows site owners to streamline their bot detection without the use of complex code.

What is client-side bot detection?

Vendors who rely on client-side bot detection will require you to install code – usually a piece of JavaScript – on the pages you need to protect. For mobile applications, this would typically be an SDK. This code is delivered to the visitor’s client (in most cases the browser they are visiting your site from), runs there, and returns a response to the bot detection solution.

This code presents challenges to any visitor, which the visitor must pass to prove their humanity before the code allows the rest of the page to load. These challenges could be based on human-like mouse gestures, typing speed, and even the device’s physical position from its accelerometer. They’re also based on browser and device fingerprints – supposedly unique identifiers based on the hardware and software of the client.

How do adversaries bypass client-side bot detection?

A major weakness of client-side bot detection is that it puts your defensive playbook into the hands of your attackers. All the signals that you’re looking for when trying to determine whether a visitor is human or bot are visible to anyone who knows where to look – meaning all those signals can be precisely spoofed to bypass your defenses.
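To make the spoofing problem concrete, here is a minimal sketch of a verdict that depends only on values reported by the client. The field names (`mouse_moves`, `typing_interval_ms`, `webdriver`) and the thresholds are hypothetical, chosen for illustration; they are not taken from any real vendor’s script. The point is simply that once an attacker has read which fields are checked, a fabricated payload is indistinguishable from a genuine one.

```python
def looks_human(signals):
    """Hypothetical verdict based solely on client-reported values."""
    return (
        signals.get("mouse_moves", 0) >= 5                     # some pointer activity
        and 40 <= signals.get("typing_interval_ms", 0) <= 400  # plausible typing rhythm
        and not signals.get("webdriver", True)                 # no automation flag reported
    )


# A genuine visitor's browser reports what actually happened.
genuine = {"mouse_moves": 37, "typing_interval_ms": 180, "webdriver": False}

# A naive bot reports nothing human-like and is caught.
naive_bot = {"mouse_moves": 0, "typing_interval_ms": 0, "webdriver": True}

# A bot whose author has read the detection script simply fabricates
# values inside the accepted ranges.
spoofed_bot = {"mouse_moves": 12, "typing_interval_ms": 150, "webdriver": False}

for name, payload in [("genuine", genuine), ("naive_bot", naive_bot), ("spoofed_bot", spoofed_bot)]:
    print(name, looks_human(payload))
```

In this sketch the check has no way to tell `genuine` and `spoofed_bot` apart, because both are just dictionaries of self-reported numbers.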

Vendors who use client-side detection will usually obfuscate their code – scrambling it so it isn’t readable by humans – in an attempt to hide their detection signals. However, we have evidence that adversaries routinely use easily accessible tools to de-obfuscate code automatically, rendering the vendors’ efforts futile.

Attackers are financially motivated and highly skilled, so giving them any opportunity to decipher code is unlikely to end well for the target organization.

Also, browser and device fingerprints are becoming less relevant as society shuns user surveillance in favor of enhanced privacy. There’s also a burgeoning criminal economy of spoofed and stolen device fingerprints being traded on the dark web via sites like the Genesis Market, so even if a fingerprint looks human, it could easily be a bot or malicious actor masquerading as the original user.

What is server-side bot detection?

In contrast to client-side bot detection, server-side bot detection analyzes the web log data that all servers generate as visitors make requests. This means there is no requirement to install any additional code to websites or mobile applications to detect bot activity – server-side solutions just need to be given access to this data.

As you can imagine, web logs contain masses of data. In fact, at Netacea we ingested and analyzed over a trillion web requests in 2022. Having this amount of data available to us is a huge advantage in training our machine learning algorithms. The algorithms use pattern recognition, anomaly detection and clustering to compare incoming traffic with similar requests and determine its intent – malicious or benign – in real time (alongside expert data analysis and backed up by adversarial tactics uncovered by our threat research team).

This is far more effective than looking at individual requests in isolation, especially when the signals of individual requests can easily be spoofed. When a large number of signals act a certain way, our AI-powered detection modules can very quickly spot patterns and outliers, even in highly distributed attacks or those originating from trusted residential proxies.
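As a rough illustration of looking at traffic in aggregate rather than one request at a time, the sketch below parses web log lines and groups them by method, path and user agent, then flags groups that are hit by many distinct IP addresses. This is a deliberately simple threshold heuristic standing in for the machine learning described above, not Netacea’s actual algorithm; the log layout (Combined Log Format), the function names, the thresholds and the sample data are all assumptions.

```python
import re
from collections import defaultdict

# Assumed layout: Combined Log Format
# ip - - [time] "METHOD path HTTP/x" status size "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Return the named fields of one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None


def flag_distributed_groups(lines, min_ips=3, min_requests=6):
    """Flag (method, path, agent) groups seen from many distinct IPs."""
    groups = defaultdict(lambda: {"ips": set(), "count": 0})
    for line in lines:
        record = parse_line(line)
        if record is None:
            continue  # skip lines that are not in the assumed format
        key = (record["method"], record["path"], record["agent"])
        groups[key]["ips"].add(record["ip"])
        groups[key]["count"] += 1
    return {
        key: stats
        for key, stats in groups.items()
        if len(stats["ips"]) >= min_ips and stats["count"] >= min_requests
    }


def make_line(ip, method, path, agent):
    """Build a synthetic log line for the example (made-up data)."""
    return (
        f'{ip} - - [01/Jan/2023:00:00:00 +0000] '
        f'"{method} {path} HTTP/1.1" 200 512 "-" "{agent}"'
    )


# Eight login attempts spread over four IPs, plus two ordinary page views.
SAMPLE_LOG = (
    [make_line(f"203.0.113.{i % 4}", "POST", "/login", "ExampleAgent/1.0") for i in range(8)]
    + [make_line("198.51.100.7", "GET", "/products", "Mozilla/5.0")] * 2
)

flagged = flag_distributed_groups(SAMPLE_LOG)
for key, stats in flagged.items():
    print(key, "requests:", stats["count"], "distinct IPs:", len(stats["ips"]))
```

No single IP in the sample sends more than two login requests, so a per-request or per-IP view would see nothing unusual; only the grouped view shows the pattern.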

Also, because server-side bot detection tools like Netacea don’t interact with the visitor’s client, we are totally invisible to attackers. We don’t have to waste resources figuring out new ways to scramble our code so attackers can’t bypass our detection – because there is no code to de-obfuscate, and no signals to decipher to begin with.

Unlike our competitors who rely on client-side code, our threat researchers have never once come across an adversary that’s determined Netacea is protecting a particular organization’s applications, let alone published bypass techniques targeting our solution on the open or dark web.

Server-side bot detection protects everything, even APIs

Client-side bot protection must be configured to protect each page or path you think might be hit by bot traffic, with a different implementation per website and app. This is inconvenient and messy, and leaves out a crucial attack vector increasingly targeted by bots: API endpoints.

Because APIs are designed to be interacted with by applications, not humans, it makes sense that malicious bots also use them as an entry point for attacks. This also means that client-based detection systems looking for human behavior are simply not suited to detecting bots that target APIs.

On the other hand, server-side detection sees every HTTP request, no matter whether it comes via a website, mobile application or API. Netacea has the same visibility via one integration of all web-based endpoints, including every path, every mobile app screen, and even APIs, as standard.
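The idea that one server-side integration sees every request can be sketched with a generic WSGI middleware. This is only an illustration of the principle, assuming a Python WSGI application; the source does not describe how Netacea’s integration is built, and the names `request_logger`, `demo_app` and `seen` are hypothetical. The wrapper records every request that reaches the application, whether the path is a web page or an API endpoint, with no per-page code.

```python
from wsgiref.util import setup_testing_defaults


def request_logger(app, sink):
    """Wrap a WSGI app so every request is recorded before it is handled."""
    def middleware(environ, start_response):
        sink.append({
            "method": environ.get("REQUEST_METHOD"),
            "path": environ.get("PATH_INFO"),
            "agent": environ.get("HTTP_USER_AGENT", ""),
        })
        return app(environ, start_response)
    return middleware


def demo_app(environ, start_response):
    """Stand-in application that answers every path the same way."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"ok"]


seen = []
wrapped = request_logger(demo_app, seen)

# Simulate one web page request and one API request against the same app.
for path in ("/products", "/api/v1/cart"):
    environ = {"PATH_INFO": path, "REQUEST_METHOD": "GET"}
    setup_testing_defaults(environ)  # fills in the remaining required WSGI keys
    wrapped(environ, lambda status, headers: None)

print([record["path"] for record in seen])
```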

Why not have a blend of both client and server-side bot detection?

Some vendors use both client-side and server-side bot detection in combination to increase the number of signals at their disposal and improve the accuracy of their recommendations. However, the mere presence of client-side code is a risk on several fronts.

Firstly, since we know attackers can de-obfuscate client-side code and spoof the signals they send back, it’s impossible to trust the results of client-side detection. Netacea often goes head-to-head with bot management tools that use client-side detection, and in some cases identifies as much as 30 times more malicious traffic. It’s likely that a chunk of this traffic has simply bypassed client-side detection, or slipped in via paths or endpoints where the code isn’t installed. Any amount of client-side detection is a liability to accurate bot protection.

Secondly, client-side code is more complicated than you might think to install and maintain. Most web teams will demand that you test any changes to the site’s code base, which takes time and resources. And since bots are constantly changing tactics to evade detection or attack new endpoints, bot detection tools must keep pace via regular updates, causing a recurring cycle of JavaScript and SDK revisions, plus testing, to maintain your protection against bots. Conversely, server-side detection is updated on the vendor side, so no effort is required from you to keep your protection up to date.

Bot protection vendors who do rely on any client-side protection, even alongside their own server-side detection, claim it’s necessary to provide accurate recommendations. Some even argue that turning off client-side protection significantly reduces the number of bots detected.

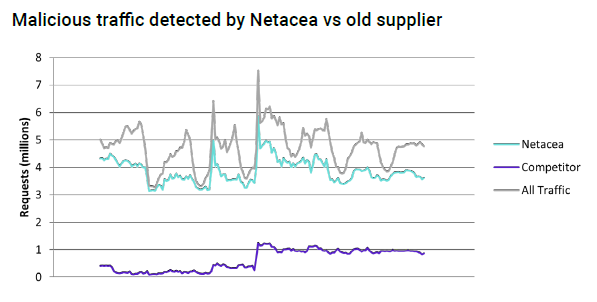

In this recent example, Netacea caught six times more bots on a client’s website using just server-side detection than a competitor did using client-side detection.

This might be true for them because their tools were designed to depend on client signals and have been playing catch-up with bots for years. But Netacea was built to detect bots solely from server signals from day one, meaning our more advanced machine learning-based bot detection technology routinely outperforms client-side or hybrid tools – and we have the data to prove it.

Make the switch to server-side bot detection

Putting Netacea’s server-based detection to the test against solutions still reliant on client-side code is a simple process. Because we only need to see web log data from your server, all it takes is a simple, unobtrusive API connection for us to start collecting the data you need – you can even send us historical data logs to compare previous recommendations offline.

Find out more about putting Netacea to the test with a free demo and POC.
